Profanity filters might sound completely passé, or like something your grandparents would want installed on all their devices. However, they are fundamental to protecting your company when it uses Microsoft Teams or Webex.

History

Back when the internet was still the wild, wild west, profanity filters were used in online forums and chat rooms to block words deemed offensive by the administrator or the community. The sheer flood of expletives quickly became overwhelming, and custom-programmed blockers were put in place in chat rooms and online video games.

Once the internet became a massive tool for companies, hospitals and schools, the need for these blockers became even more evident.

Law

United States of America:

The First Amendment to the United States Constitution protects freedom of speech against government censorship. This protection extends to cyberspace, so government filtering of online content in the United States is minimal. Nevertheless, the internet is regulated through a complex mix of legal and private mandates.

Direct censorship is prohibited by the First Amendment, with some exceptions such as obscenity and child pornography. However, there have been several attempts to regulate children’s access to harmful material, notably the Communications Decency Act of 1996 and the Child Online Protection Act of 1998. Other related acts were passed, including the Children’s Online Privacy Protection Act (enacted in 1998, in effect since 2000), which protects the privacy of minors online, and the Children’s Internet Protection Act of 2000, which requires K-12 schools and libraries receiving federal assistance for internet access to restrict minors’ access to unsuitable material.

European Union:

This is not only an American phenomenon. In Germany, the Federal Review Board for Media Harmful to Minors (German: Bundesprüfstelle für jugendgefährdende Medien, or BPjM) states that “The basic rights of freedom of expression and artistic freedom in Article 5 of the German Grundgesetz are not guaranteed without limits. Along with the ‘provisions of general laws’ and ‘provisions […] in the right of personal honor’, ‘provisions for the protection of young persons’ may restrict freedom of expression (Article 5 Paragraph 2).”

This applies not only to physical media (printed works, videos, CD-ROMs etc.) but to distribution of broadcasts and virtual media too.

Digital Services Act:

The DSA is meant to improve content moderation on social media platforms and address concerns about illegal content. It is organized in five chapters, the most important of which regulate the liability exemption of intermediaries (Chapter 2), the obligations of intermediaries (Chapter 3), and the cooperation and enforcement framework between the Commission and national authorities (Chapter 4).

The DSA proposal maintains the current rule under which companies that host others’ data are not liable for that content unless they actually know it is illegal and, upon obtaining such knowledge, fail to act to remove it. This so-called “conditional liability exemption” is fundamentally different from the broad immunity granted to intermediaries under the equivalent rule in the United States (“Section 230 CDA”).

In addition to the liability exemptions, the DSA would introduce a wide-ranging set of new obligations on platforms, including some that require them to disclose to regulators how their algorithms work, and others that would create transparency about how decisions to remove content are made and how advertisers target users.

Dangers of lacking profanity filters in the workplace

Detecting offensive words and actions in the workplace before they cause harm is essential to providing a positive environment for your company. Filtering foul language and commands is extremely important in collaborative work.

NSFW material in the cloud

Whatever happens inside a company’s channels is the organization’s direct responsibility, so whatever filth your employees might be saying or searching for can lead to serious consequences for everyone involved.

There are plenty of articles about how to bypass censorship and blockers at work (e.g. “How Not To Get Caught Looking at NSFW Content on the Job”), and frankly, if any dangerous material is found on the company’s server, it could mean an investigation of every single computer.

NSFW content could be fatal for business, as employers could also be paying to store questionable data in the corporate cloud.

Employees could use unstructured sync-and-share applications to upload unsuitable content to cloud storage servers. A recent Veritas report found that 62% of employees use such services.

Even worse, 54% of all data is “dark”, meaning it is unclassified and invisible to administrators. Video usually takes up the most storage, which could mean a significant extra cost for keeping dubious content.

Harassment

We are not just talking about a few mishaps (you can filter those too!). We are talking about serious issues like harassment.

Managers can bully employees, employees can insult one another, and the dreaded sexual harassment may threaten the safety of the workplace. When bullying, insults and sexual harassment occur, they create a hostile work environment that damages morale and productivity.

Organizations are responsible for preventing any and all types of harassment.

With profanity filters, you can prevent these hurtful messages from ever reaching their destination, and also flag and investigate repeat offenders.

The economic costs of sexual harassment in the workplace:

Deloitte Access Economics has published a paper on the costs of sexual harassment in the workplace, which found that in 2018 alone, workplace sexual harassment in Australia imposed a number of costs (figures below are in Australian dollars).

The costs included in the model were:

- $2.6 billion in lost productivity, or $1,053 on average per victim.

- $0.9 billion in other costs, or $375 on average per victim.

The economic cost of workplace sexual harassment is shared by different groups.

The largest productivity-related costs were imposed on employers ($1,840.1 million), driven by turnover costs, friction costs associated with short-term absences from work, and manager time spent responding to complaints. The government lost $611.6 million in taxes through reduced individual and company taxes.

The largest sources of other costs are the deadweight losses ($423.5 million), which are incurred by society.

The other major sources of costs in this category are costs to the government for courts, jails and police, and legal fees for individuals.

Microsoft options

Microsoft is working on a new mechanism that filters threatening or rude messages sent by employees.

A new entry in the company’s roadmap promises an upgrade to the Microsoft 365 Compliance Center that may allow administrators to “detect threat, targeted harassment and profanities”.

This is not limited to English: the trainable classifiers will also be able to detect profanity in French, Spanish, German, Portuguese, Italian, Japanese and Chinese.

Another imminent update for admins to consider is alert exclusion in the Microsoft 365 security center. The new feature aims to reduce the number of security alerts issued by Microsoft Defender for Identity, so that users are only bothered by the ones that matter.

How to implement profanity filters for Microsoft Teams and Webex

While Microsoft is still working on the idea, the responsibility for keeping content safe lies with each company, and luckily for them, AGAT already offers Safe Content Inspection for Microsoft Teams.

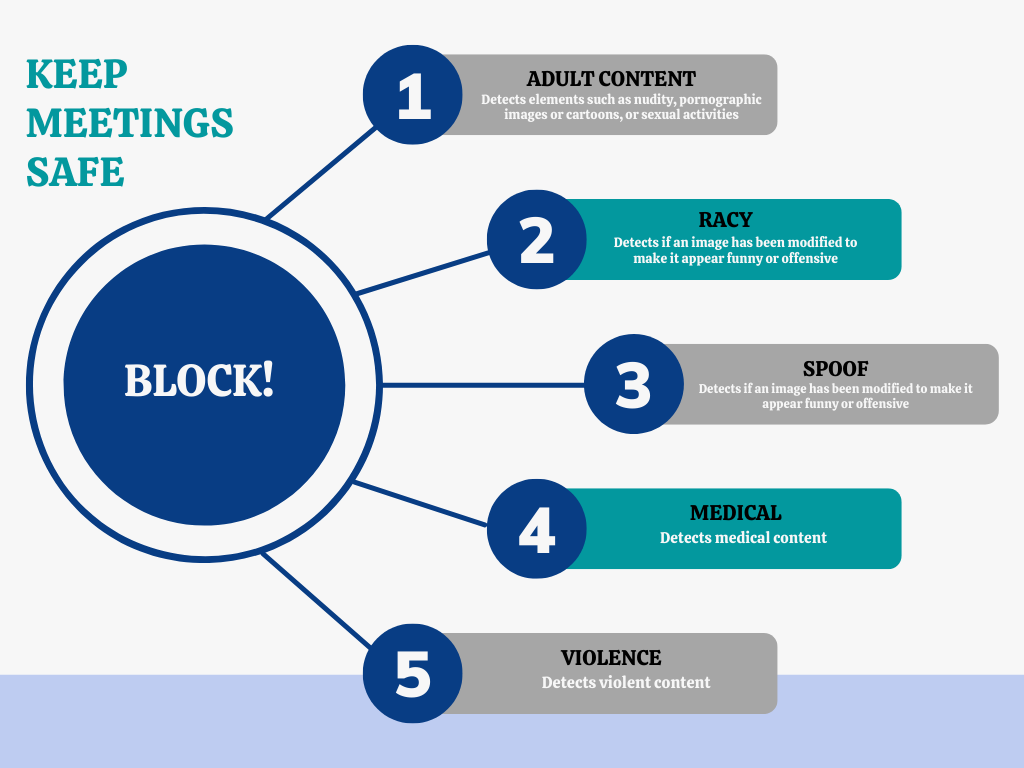

Using state-of-the-art technology, AGAT’s Safe Content Inspection can detect unsafe content in all the important categories: racy, adult, spoof, medical and violence.

- Adult Content: Detects elements such as nudity, pornographic images or cartoons, or sexual activities.

- Racy: Detects racy content that may include revealing or transparent clothing, strategically covered nudity, lewd or provocative poses, or close-ups of sensitive body areas.

- Spoof: Detects whether an image has been modified to make it appear funny or offensive.

- Medical: Detects medical content.

- Violence: Detects violent content.

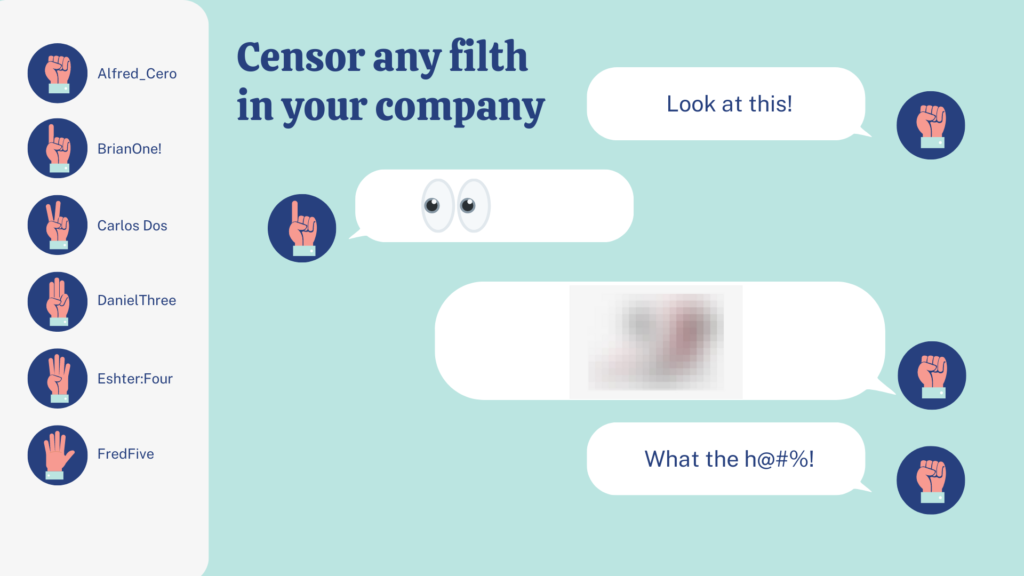

The software detects and blocks unsafe content in real time, before it reaches its destination, whatever the format (text or images). The AI matches the content against the configured categories and takes action by blocking, deleting or sending a notification when an incident is detected.

Every identified incident raises flags, with messages and pop-ups alerting the parties involved and/or the administrators. Again, this is crucial for stopping repeat offenders and preventing possible workplace harassment.
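To make the block-then-flag flow concrete, here is a minimal Python sketch of a moderation gate that blocks unsafe messages, notifies about the block and escalates repeat offenders to administrators. It is not AGAT’s implementation: the `ModerationGate` class, the `is_unsafe` classifier hook and the `repeat_threshold` parameter are hypothetical names chosen for illustration, and `is_unsafe` can be any classifier you plug in.

```python
from collections import Counter
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class ModerationGate:
    """Hypothetical gate between sender and recipient (illustration only)."""

    # Any classifier returning True for unsafe text; an assumption, not a real API.
    is_unsafe: Callable[[str], bool]
    # Blocked messages per sender before administrators are alerted (assumed policy).
    repeat_threshold: int = 3
    # Running count of blocked messages per sender.
    incidents: Counter = field(default_factory=Counter)

    def handle(self, sender: str, message: str) -> tuple[bool, list[str]]:
        """Return (delivered, alerts) for a single message."""
        if not self.is_unsafe(message):
            return True, []  # Safe: deliver unchanged, raise no alerts.
        self.incidents[sender] += 1
        alerts = [f"Message from {sender} was blocked."]
        if self.incidents[sender] >= self.repeat_threshold:
            # Repeat offender: escalate to administrators.
            alerts.append(
                f"Admin alert: {sender} has {self.incidents[sender]} blocked messages."
            )
        return False, alerts
```

For example, with `repeat_threshold=2`, a sender’s first blocked message only produces the block notice, while the second also produces an admin alert.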

Detecting unsafe content is not an easy task: not everything is defined by clear rules, especially when dealing with images and videos. Addressing these issues requires serious machine learning, both to detect harmful content lurking around and to avoid false positives.

False positives:

Another problem you might encounter is paying for a lackluster censor. The internet is full of false positives, so it takes a very competent AI to differentiate between safe content and utter filth.

Technology sometimes can’t keep up with the intricacies of human language. Your filter might rely on a blanket list of forbidden words, but what happens if a completely safe text contains a string (or substring) of letters that appears to have an obscene or unacceptable meaning?

You might face the Scunthorpe problem, where a filter can detect words but not context, so it blocks words that are completely safe and can leave a lot of potential clients out of the loop.

The Scunthorpe problem gets its name from a 1996 incident in which AOL prevented residents of the town of Scunthorpe, North Lincolnshire, England, from creating accounts, because the town’s name contains an obscene substring.

In the early 2000s, Google’s opt-in SafeSearch filters made the same error, preventing people from searching for local businesses or URLs that included Scunthorpe in their names.

It might seem silly, but this tiny mistake can make a company lose clients and money.
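The difference between matching raw substrings and matching whole words fits in a few lines of Python. This is a minimal sketch assuming a hypothetical one-word blocklist (the mild word `hell`, standing in for a real list): `naive_filter` reproduces the Scunthorpe problem by flagging safe words such as “hello” and “shell”, while `word_boundary_filter` only flags the blocked word when it stands on its own.

```python
import re

# Hypothetical one-word blocklist, used only for illustration.
BLOCKLIST = ["hell"]

# Whole-word pattern: \b anchors each entry at word boundaries, and
# re.escape guards against regex metacharacters in list entries.
_WHOLE_WORD = re.compile(
    r"\b(?:" + "|".join(map(re.escape, BLOCKLIST)) + r")\b", re.IGNORECASE
)


def naive_filter(text: str) -> bool:
    """Flag text if a blocked word appears anywhere, even inside another word."""
    lowered = text.lower()
    return any(word in lowered for word in BLOCKLIST)


def word_boundary_filter(text: str) -> bool:
    """Flag text only if a blocked word appears as a whole word."""
    return _WHOLE_WORD.search(text) is not None


print(naive_filter("Hello from the shell team"))          # True (false positive)
print(word_boundary_filter("Hello from the shell team"))  # False
print(word_boundary_filter("What the hell happened?"))    # True
```

Word boundaries fix this particular false positive but are not a complete solution: they miss deliberate misspellings such as “h3ll”, do not help much in languages written without spaces between words, and still cannot tell whether a whole word is being used offensively, which is why context-aware classification matters.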

And what about images? There is a popular game on the internet where you have to guess whether you are looking at a blueberry muffin or a chihuahua. It can be difficult even for humans, so how can an AI keep up?

You need to be able to adjust how strict your filter is, i.e. what it should catch, from outright pornography to people’s faces, and you can do that by applying the desired filters in AGAT’s Safe Content Inspection.
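Adjustable strictness usually comes down to per-category thresholds. The sketch below is a hypothetical illustration, not AGAT’s configuration format: it assumes an image classifier that rates each category (adult, racy, spoof, medical, violence) on a five-step likelihood scale, and the `Likelihood` enum, the `STRICT` and `LENIENT` policies and `flagged_categories` are names invented for this example.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    """Assumed five-step confidence scale from a hypothetical classifier."""

    VERY_UNLIKELY = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4
    VERY_LIKELY = 5


# Hypothetical policies: a category is flagged when its score reaches the
# threshold. Lower thresholds mean a stricter filter; categories missing
# from a policy are never flagged.
STRICT = {
    "adult": Likelihood.POSSIBLE,
    "racy": Likelihood.POSSIBLE,
    "spoof": Likelihood.LIKELY,
    "medical": Likelihood.LIKELY,
    "violence": Likelihood.POSSIBLE,
}
LENIENT = {
    "adult": Likelihood.LIKELY,
    "racy": Likelihood.VERY_LIKELY,
    "violence": Likelihood.VERY_LIKELY,
}


def flagged_categories(
    scores: dict[str, Likelihood], policy: dict[str, Likelihood]
) -> list[str]:
    """Return the sorted categories whose score meets the policy threshold."""
    return sorted(
        category
        for category, threshold in policy.items()
        # A category the classifier did not score is treated as VERY_UNLIKELY.
        if scores.get(category, Likelihood.VERY_UNLIKELY) >= threshold
    )


# Illustrative (made-up) scores for a beach photo.
beach_photo = {"adult": Likelihood.UNLIKELY, "racy": Likelihood.POSSIBLE}
print(flagged_categories(beach_photo, STRICT))   # ['racy']
print(flagged_categories(beach_photo, LENIENT))  # []
```

Switching between `STRICT` and `LENIENT` changes what gets blocked without retraining anything; only the cut-off for each category moves.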

Safe Content Inspection

Safe Content Inspection was designed to help companies and organizations achieve the level of regulation and ethics needed to run their business as it should be run.

We are currently developing this feature further to include video inspection, and soon more UC platforms such as Slack, Skype for Business and Zoom, so stay tuned for more updates.

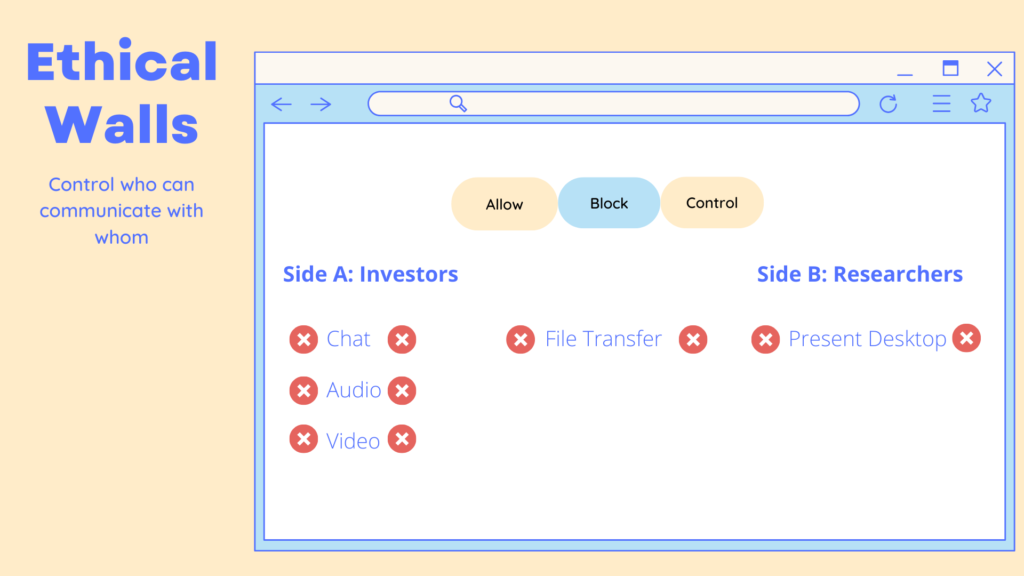

For more information about AGAT’s Real Time DLP and Ethical Walls contact us today!