Due to the COVID-19 pandemic, many companies had to restructure the way they worked almost overnight. Suddenly, data that had been protected by the organization’s regulations and contracts had to leave the company so that employees could keep working with it. That is why FINRA extended its compliance regulations to the internet space, establishing strict cloud governance standards and making cybersecurity a must.

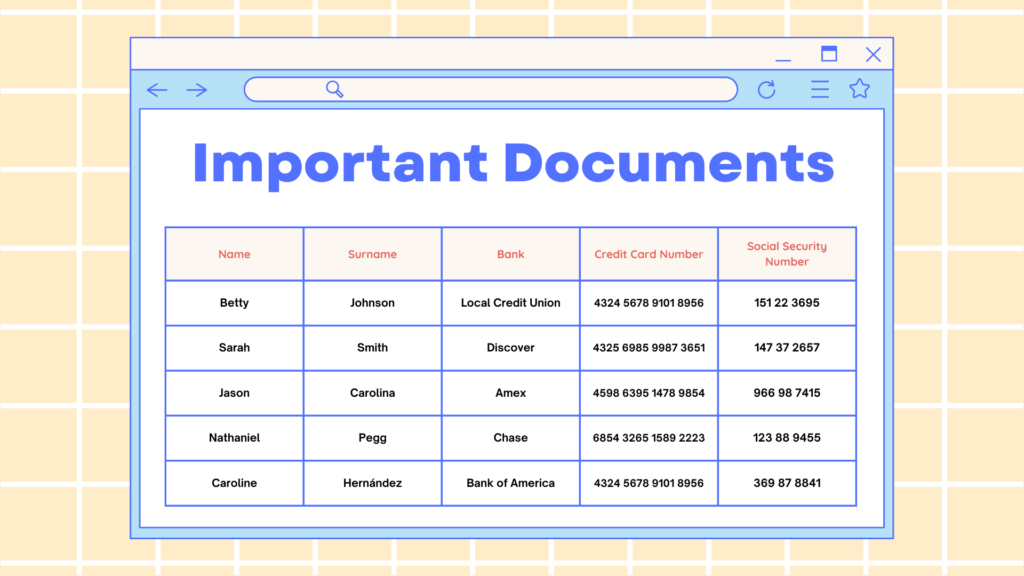

Insider threats to enterprise data are a constant cause for concern, since they can inflict enormous damage on a business, especially in the financial services sector. A simple typo by an employee with privileged access can be just as damaging as a compromised employee looking to make a quick buck. Financial institutions face the second-highest breach costs among targeted industries.

Table of contents

- What is FINRA?

- What does FINRA do?

- Rules regarding information barriers

- Rules regarding data loss prevention (DLP)

- Rules regarding archiving and data recovery

1- What is FINRA?

The Financial Industry Regulatory Authority (FINRA) is a private, nonprofit American corporation that acts as a self-regulatory organization (SRO). Its mission is to set forth rules and regulate stockbrokers, exchange markets and broker-dealer firms, keeping the U.S. markets safe and fair. FINRA is the successor to the National Association of Securities Dealers, Inc. (NASD) as well as the member regulation, enforcement, and arbitration operations of the New York Stock Exchange.

The US government agency that acts as the ultimate regulator of the US securities industry, including FINRA, is the US Securities and Exchange Commission (SEC). Although FINRA is not a government organization, it does refer insider trading and fraud cases to the SEC, and if you fail to comply with FINRA rules, you may face disciplinary actions, including fines and penalties that are set to deter financial misconduct.

2- What does FINRA do?

- Oversees all securities licensing procedures and requirements for the United States.

- Governs business between brokers, dealers, and the investing public.

- Examines firms for compliance with FINRA and SEC rules.

- Performs all relevant disciplinary and record-keeping functions.

- Encourages member firms to secure their financial data and execute transparent transactions.

- Provides steps that help firms define accurate cybersecurity goals.

- Fosters transparency in the marketplace.

Is your company compliant? You must, among other things, make sure that digital data is immutable and discoverable, and that access to and usage of data can be restricted, regulated, and audited. This is where AGAT’s SphereShield software can help.

3- Rules regarding information barriers

In short, financial institutions are subject to regulations that prevent employees in certain roles from communicating or collaborating with employees in other specific roles. Why? Because conflicts of interest are involved, and exchanging sensitive information can have severe consequences.

Research analysts gather data on possible investment opportunities and provide information to investors. As their popularity has grown, so has their influence on the price of securities: a good rating can send the price of an asset way up, while a slightly unfavorable change in a rating can make prices drop. That’s why, to maintain a fair marketplace, research analysts cannot disclose any information they have collected before an official public release.

The practice of information barriers has been expanded over recent decades to prevent those communications and risky information flows and to avoid insider trading, protecting investors, clients, and other key stakeholders from this wrongful conduct. FINRA Rules 2241 and 2242 require organizations to establish policies and implement information barriers that prevent roles involved in banking services, sales, or trading from exchanging information and communicating with research analysts.

– How to comply with FINRA information barriers requirements

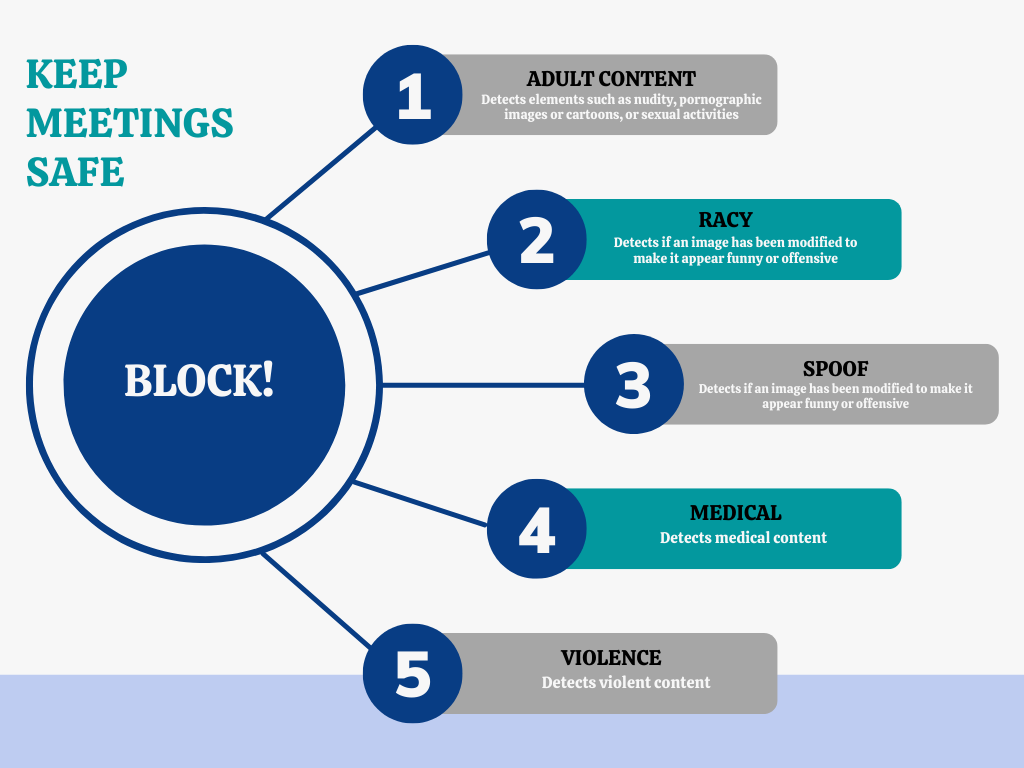

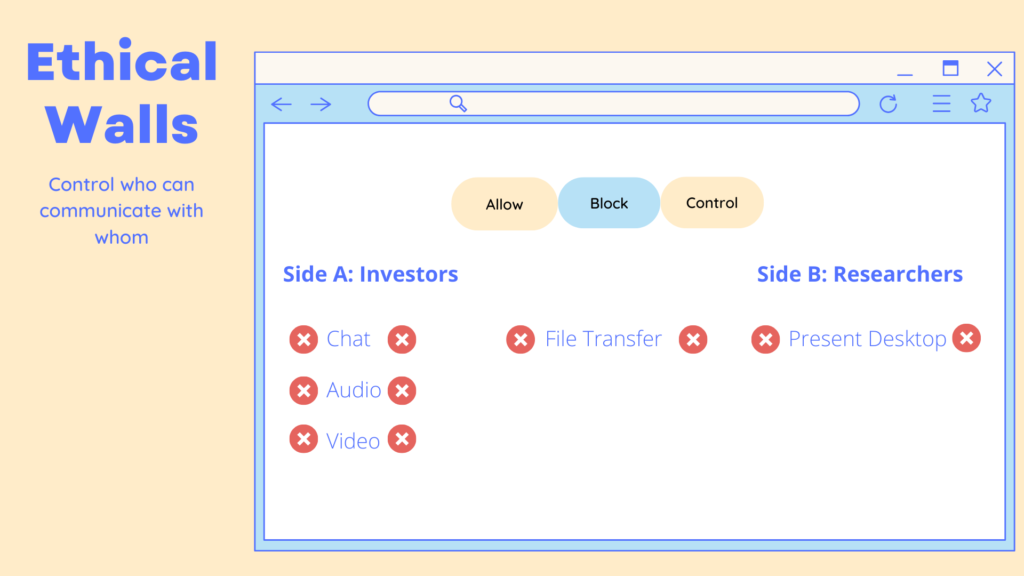

AGAT’s SphereShield offers granular control over users and groups engaging in communications, both with other areas of the company and with external organizations. It also includes independent control for different kinds of actions: instant messaging, audio, video, conferences, desktop sharing and file transfer.

So, let’s say a user identified as a Research Analyst tries to communicate with someone from a restricted area: a well-implemented information barrier will block that attempt entirely.
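To make the idea concrete, here is a minimal Python sketch of how such a barrier check could work. It is not SphereShield’s actual API or configuration format: the role names, channel names, `BarrierRule` class and `is_allowed` function are all hypothetical and exist only to illustrate per-role, per-channel blocking.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

# Hypothetical channel names, loosely mirroring the action types listed above.
CHANNELS = frozenset(
    {"im", "audio", "video", "conference", "desktop_sharing", "file_transfer"}
)


@dataclass(frozen=True)
class BarrierRule:
    """A symmetric barrier between two roles for a set of channels."""
    role_a: str
    role_b: str
    blocked_channels: FrozenSet[str]

    def applies_to(self, sender_role: str, recipient_role: str) -> bool:
        # The barrier applies in both directions.
        return {sender_role, recipient_role} == {self.role_a, self.role_b}


# Hypothetical policy: research analysts are walled off on every channel.
BARRIER_RULES: List[BarrierRule] = [
    BarrierRule("research_analyst", "investment_banking", CHANNELS),
    BarrierRule("research_analyst", "sales", CHANNELS),
    BarrierRule("research_analyst", "trading", CHANNELS),
]


def is_allowed(sender_role: str, recipient_role: str, channel: str) -> bool:
    """Return False if any barrier rule blocks this channel between the roles."""
    if channel not in CHANNELS:
        raise ValueError(f"unknown channel: {channel}")
    for rule in BARRIER_RULES:
        if rule.applies_to(sender_role, recipient_role) and channel in rule.blocked_channels:
            return False
    return True


print(is_allowed("research_analyst", "trading", "im"))     # False
print(is_allowed("research_analyst", "compliance", "im"))  # True
```

Because each rule carries its own set of blocked channels, a firm could in principle allow, say, instant messaging between two groups while still blocking file transfer, simply by narrowing `blocked_channels` for that rule.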

4- Rules regarding data loss prevention (DLP)

Firms must put robust policies in place so that employees know which sensitive information they cannot disclose, and must also monitor them for suspicious activity that hints at possible misconduct. FINRA Rules 3110/3013 explicitly mandate analyzing all electronic employee communications.

Clearly, reading all emails and listening to all voice calls is just not possible, but there are technologies that can actively transcribe, analyze, and monitor communications, flagging any suspicious behaviors or activities. As an extra step, there’s software that can help a firm move surveillance from reactive monitoring (that is, addressing employees’ missteps after the fact) to a proactive, rule-based approach. This allows risks to be identified, managed, and mitigated before information breaches or other incidents occur.
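As a rough illustration of rule-based flagging, the Python sketch below runs a message through a small set of surveillance rules and returns the names of the rules it trips. The rule names and regular expressions are invented for this example; a real lexicon would be far larger and tuned by compliance staff.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SurveillanceRule:
    name: str
    pattern: "re.Pattern"


# Hypothetical rules, for illustration only.
SURVEILLANCE_RULES: List[SurveillanceRule] = [
    SurveillanceRule(
        "guarantee_language",
        re.compile(r"\bguaranteed?\s+(returns?|profits?)\b", re.IGNORECASE),
    ),
    SurveillanceRule(
        "off_channel",
        re.compile(r"\b(text|call|message)\s+me\s+on\s+my\s+(personal|private)\b", re.IGNORECASE),
    ),
    SurveillanceRule(
        "pre_release",
        re.compile(
            r"\bbefore\s+(the\s+)?(report|rating|announcement)\s+(is\s+)?(published|released)\b",
            re.IGNORECASE,
        ),
    ),
]


def flag_message(text: str) -> List[str]:
    """Return the names of all rules whose pattern appears in the message."""
    return [rule.name for rule in SURVEILLANCE_RULES if rule.pattern.search(text)]


print(flag_message("Guaranteed returns! Call me on my personal phone."))
# ['guarantee_language', 'off_channel']
print(flag_message("Lunch at noon?"))
# []
```

Flagged messages would then go to a reviewer rather than being judged automatically; the point of the sketch is only that rules are defined up front instead of after an incident.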

– How to comply with FINRA DLP requirements

AGAT’s DLP engine inspects content in real time and can block or mask any data defined as sensitive before it reaches the cloud or is sent to external users. Firms can use it to prevent information leaks and insider trading offenses, and it also helps them identify communication red flags for risk assessment and staff training.
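The masking step can be pictured with a short Python sketch. This is not how AGAT’s DLP engine is implemented; the two patterns below (a US Social Security number format and a 13 to 16 digit card-like number) and the `mask_sensitive` function are assumptions chosen purely to show content being redacted before it is sent.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real DLP policies use many more detectors
# and validation steps (checksums, context, dictionaries).
SENSITIVE_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def mask_sensitive(text: str) -> Tuple[str, List[str]]:
    """Replace every sensitive match with a placeholder.

    Returns the masked text and the labels of the patterns that matched.
    """
    findings = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        text, count = pattern.subn(f"[{label.upper()} REDACTED]", text)
        if count:
            findings.append(label)
    return text, findings


masked, found = mask_sensitive("SSN 123-45-6789, card 4111 1111 1111 1111.")
print(masked)  # SSN [SSN REDACTED], card [CARD_NUMBER REDACTED].
print(found)   # ['ssn', 'card_number']
```

A blocking policy would follow the same pattern: instead of rewriting the text, the sender’s message is rejected whenever `findings` is not empty.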

5- Rules regarding archiving and data recovery

Examining a company’s books and records to make sure they are up to date and accurate is a significant component of FINRA industry inspections. FINRA’s rules establish that all the records it might need to audit must be easily and promptly accessible.

FINRA Rules 4511, 2210 and 2212 cover storage and recordkeeping, stating that all organizations must preserve their books and records in compliance with SEC Rule 17a-4. This includes ensuring the easy location, access, and retrieval of any particular record for examination by the staff of the Commission at any time. The rule also has specific provisions for electronic storage, such as accurately organizing and indexing all information.

– How to comply with FINRA eDiscovery requirements

An eDiscovery search feature isn’t an ordinary content search tool. It provides legal and administrative capabilities, generally used to identify content (including content on hold) to be exported and presented as evidence as needed by regulatory authorities or legal counsel.

The eDiscovery solution from SphereShield makes data immediately available to regulatory organizations or commissions by providing advanced search capabilities to quickly retrieve and export data. This solution can also be integrated with other existing eDiscovery systems.
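For readers who want a feel for what “search and export” means in practice, here is a small, self-contained Python sketch. It does not reflect SphereShield’s data model or API: the `ArchivedMessage` record, its fields, and the `search` and `export_json` helpers are hypothetical, and a real archive would sit on indexed, immutable storage rather than an in-memory list.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class ArchivedMessage:
    message_id: str
    sender: str
    recipients: Tuple[str, ...]
    sent_at: datetime
    body: str
    on_hold: bool = False  # e.g. subject to a legal hold


def search(
    archive: Iterable[ArchivedMessage],
    keyword: Optional[str] = None,
    participant: Optional[str] = None,
    start: Optional[datetime] = None,
    end: Optional[datetime] = None,
) -> List[ArchivedMessage]:
    """Filter archived messages by keyword, participant and date range."""
    results = []
    for msg in archive:
        if keyword and keyword.lower() not in msg.body.lower():
            continue
        if participant and participant != msg.sender and participant not in msg.recipients:
            continue
        if start and msg.sent_at < start:
            continue
        if end and msg.sent_at > end:
            continue
        results.append(msg)
    # Oldest first, so exports read chronologically.
    return sorted(results, key=lambda m: m.sent_at)


def export_json(messages: Iterable[ArchivedMessage]) -> str:
    """Serialize matching messages for handover to a reviewer."""
    rows = []
    for msg in messages:
        row = asdict(msg)
        row["sent_at"] = msg.sent_at.isoformat()
        rows.append(row)
    return json.dumps(rows, indent=2)
```

A call such as `search(archive, keyword="rating", participant="analyst@example.com")` would return only the messages that mention the keyword and involve that person, and `export_json` turns the result into a file that can be passed on for review.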